to Raita ... may your photographic journey be fun

Today I'd like to put together a quick tutorial on how to mount and use an Olympus OM lens on an EOS camera.

This is done using a small metal adaptor. One side mimics the camera mount that the lens expects, and the other side mimics the mount of an EOS lens so that the whole thing can be put on the camera.

Now, EOS lenses are fully electronic, meaning that all control of the lens by the camera goes through an electronic interface (you can see this on the camera when you take a lens off: it's the row of little gold contacts).

Since the OM lens is fully mechanical it has no capacity for this sort of thing.

Now, keep in mind that lenses are essentially simple creatures. Despite all the mystery that modern lenses seem to have, they focus and they have an aperture iris (which is just like the iris of your eye, and gets smaller to let less light through to the film / sensor).

Quite simple really.

Ok, first let's cover mounting the adaptor onto the lens. Most camera systems have a red dot on the body and on the lens to show you exactly how to line them up before bringing them together and locking them ... so match the dots, bring the two together and turn till it clicks.

So, now that the lens is fitted to the adaptor, it's ready to be used. You can check that it works: you should see the iris stop down as you turn the aperture ring on the lens (remember, it's not electronic magic, it's mechanical).

Remember, it doesn't just get darker as you stop down; the depth of field (how much in front of and behind your subject is in focus) also increases ... so if you stop down with the lens on the camera focused on something, you may see this in the viewfinder (I say may, because some people can't get past seeing that it just gets darker).
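To put some rough numbers on this, here is a minimal Python sketch using the common approximation for total depth of field when the subject is much closer than the hyperfocal distance: DoF ≈ 2·N·c·s²/f². The 50mm focal length matches the lens in this post; the 3 m subject distance and the 0.03 mm circle of confusion (a usual full-frame value) are my own assumptions, and the approximation gets less accurate when you stop down a lot or focus far away.

```python
def approx_depth_of_field_mm(focal_mm: float, f_number: float,
                             subject_mm: float, coc_mm: float = 0.03) -> float:
    """Approximate total depth of field in millimetres.

    Uses DoF ~= 2 * N * c * s^2 / f^2, which holds when the subject
    distance s is much shorter than the hyperfocal distance.
    coc_mm (circle of confusion) defaults to 0.03 mm, an assumed
    full-frame value.
    """
    return 2 * f_number * coc_mm * subject_mm ** 2 / focal_mm ** 2


if __name__ == "__main__":
    # Assumed example: 50mm lens focused at 3 m (3000 mm).
    for n in (1.8, 2.8, 4, 5.6):
        dof = approx_depth_of_field_mm(50, n, 3000)
        print(f"f/{n}: about {dof / 1000:.2f} m in acceptable focus")
```

Because the result scales in proportion to the f-number, going from f1.8 to f5.6 roughly triples the zone of sharpness at that distance (about 0.39 m versus 1.21 m with these assumptions).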

Now, as we've seen, adjusting the aperture actually stops down the iris (makes it smaller). Modern cameras (electronic or mechanical) have a cunning system that keeps the lens fully open while you compose (making the view bright and easier to focus) and stops it down only as you press the shutter. On a mechanical lens like the OM, the camera does this by working a small lever on the lens mount. Of course the EOS camera has no such mechanical control, so the adaptor simply keeps that lever pulled and the lens stays stopped down to exactly the f-stop you've set on it.

Now, let's put it onto the EOS camera ...

Because this lens now works in a pre-automated way (you know, things weren't always automatic...), the viewfinder will get darker as you stop down. This will confuse your electronic camera, which does not know:

- it can't control the lens

- what aperture the lens is set to

So you need to tell the camera that the lens is wide open, because the camera will only be able to control the shutter. Sure, you're making it darker by stopping the iris down, but the camera doesn't know that.

Depending on the camera, you may or may not need to tell it that the attached lens is out of its control. You can tell by seeing how the camera reacts once the lens is mounted.

Keep it simple: use Av mode

First, let's keep this simple by putting your camera in Av mode. I picked that because in Av the camera does what it's told regarding aperture and picks the right shutter speed for the aperture you have chosen (let's assume it gets things right ;-). We will be picking the aperture (set on the lens), only this time the camera just won't know what it is.
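Why can Av still get the exposure right when the camera has no idea what aperture is set? With stop-down metering the meter reads the light that actually gets through the lens, and that already includes the effect of the aperture. The sketch below is a deliberately simplified model of that idea in arbitrary units: light behind the lens falls off as 1/N², and the camera picks a shutter time from the measured light alone. The function names, the scene brightness value and the "target exposure" constant are all illustrative assumptions, not anything a real camera exposes.

```python
def light_reaching_meter(scene_brightness: float, f_number: float) -> float:
    """Relative light behind the lens (arbitrary units).

    Illuminance at the film/sensor plane falls off roughly as 1/N^2,
    so stopping down from f/N1 to f/N2 cuts light by (N2/N1)^2.
    """
    return scene_brightness / f_number ** 2


def av_shutter_time(measured_light: float, target_exposure: float = 1.0) -> float:
    """Shutter time the (modelled) camera picks from metered light only.

    Exposure is modelled as light * time, so the camera solves
    time = target / light. The f-number is never an input here.
    """
    return target_exposure / measured_light


if __name__ == "__main__":
    scene = 1000.0  # hypothetical scene brightness, arbitrary units
    for n in (1.8, 2.8, 5.6):
        t = av_shutter_time(light_reaching_meter(scene, n))
        print(f"f/{n}: shutter time {t:.5f} (arbitrary units)")
```

Each time you stop down, the metered light drops and the chosen shutter time grows by the same factor, so the exposure comes out the same. The darker viewfinder is the same drop in light, seen by your eye instead of the meter.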

Now, just before you rush off, we need to check something (though if your camera isn't an old EOS you may be in the clear). There are two different behaviors for EOS cameras fitted with a mechanical lens:

If the display reads “1.0” (or any number other than “00”) then you have the old stop-down metering style.

If the display reads “00” then you have the new stop-down metering style.

I think it's quite likely that you will have "00" showing with the Olympus on your camera. Anyway, this is covered in more detail at this link on EOS stop-down metering. Recommended reading for a rainy day ... when you can't be out taking pictures.

Ok, on my EOS camera I need to manually set the aperture to 1.0 in Av mode; be sure to check yours.

So, I can only use my camera in Av mode or Manual (but I have to remember not to change the aperture). The newer EOS cameras (and I think all the EOS digital cameras) use the "new method" which allows your camera to work in:

- P (program),

- Av (aperture priority) and

- M (manual).

Check your camera's stop-down behavior and set it if you need to, but most likely you won't need to worry about anything.

So, just set Av and go take some pictures.

Ohh ... and don't forget to focus :-)

One of the reasons autofocus cameras have become so common is that most people completely forget to focus ... in the heat of the moment. Manual focus gives you control, but with that comes responsibility: you can't blame the camera for a blurry photo if you didn't focus.

Many cameras have diopter adjustment, which compensates for your eyesight so you can see the viewfinder clearly with or without glasses. So just as it may be difficult to see clearly when wearing grandad's glasses, if the diopter adjustment has been knocked off center it may be impossible for you to see anything clearly in the viewfinder too.

This is normally located on the back of the finder, and you'll see a little wheel with a + and - on it ... as in this figure.

When focusing (if you haven't done it before), keep the lens wide open (f1.8 on this lens) for the easiest and brightest view. Focus by turning the focusing ring as I did in my video above, and when the subject looks sharp, it's focused.

Don't forget, those numbers in m and ft on the lens are actually accurate. You can measure (or guess) the distance to your subject, 'prefocus' the lens to that distance and just shoot.

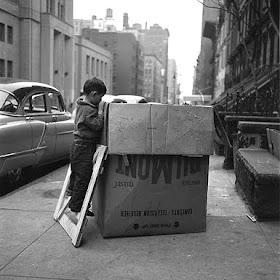

Heaps of photographers who like to "snap on the street" use exactly that technique for their candid photography (when you haven't got time to focus but you want to get it right). Candids like this one from the 1950s by Vivian Maier were undoubtedly made in just this manner.

For example, Philip Greenspun's pages on photography give this advice on zone focusing:

"The classic technique for street photography consists of fitting a wide (20mm on a full-frame camera) or moderately wide-angle (35mm) lens to a camera, setting the ISO to a moderate high speed (400 or 800), and pre-focusing the lens."

It's easier with wide-angle lenses, of course ... but if the subject is further away (say 5 meters) it's not so hard with a 50mm either. Either way, it's something worth knowing about.

Simple.
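Why do wide lenses make zone focusing so much easier? One way to see it is the hyperfocal distance H = f²/(N·c) + f: focus at H and everything from H/2 to infinity is acceptably sharp. The sketch below compares the 20mm and 35mm lenses from the quote with a 50mm. The f/8 aperture and the 0.03 mm full-frame circle of confusion are my own assumptions for illustration; the quote doesn't give an aperture.

```python
def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float = 0.03) -> float:
    """Hyperfocal distance in millimetres: H = f^2 / (N * c) + f.

    coc_mm is the circle of confusion; 0.03 mm is an assumed
    full-frame value.
    """
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


if __name__ == "__main__":
    # Assumed settings: f/8 and a 0.03 mm circle of confusion.
    for focal in (20, 35, 50):
        h = hyperfocal_mm(focal, 8)
        # Focused at H, everything from H/2 to infinity is acceptably sharp.
        print(f"{focal}mm at f/8: focus at {h / 1000:.1f} m, "
              f"sharp from {h / 2000:.1f} m to infinity")
```

With these assumptions the 20mm is sharp from under a metre out, the 35mm from about 2.6 m, and the 50mm only from about 5.2 m, which is why zone focusing a 50mm works best when the subject is a bit further away.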

To me the greatest benefits of these lenses come between f1.8 and f5.6 ... I prefer to use them at f1.8 through to f2.8. The image may look a little nicer at f2.8, but f1.8 lets in a little more than one stop more light, so you get a faster shutter speed (which is why these are called fast lenses). That can make the difference between needing 1/30th of a second and getting roughly 1/75th ... if your subject won't sit still, that'll make all the difference.
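If you want to check the stop and shutter-speed arithmetic yourself, here is a small Python sketch. Light through the lens scales as 1/N², so the difference in stops between two f-numbers is 2·log2(N_slow/N_fast), and the shutter time shrinks by (N_fast/N_slow)². The function names are just ones I made up for this example. Keep in mind that the f-numbers engraved on lenses are nominal (f2.8, for instance, is a rounded 2√2), so real-world results can differ slightly.

```python
import math


def stops_between(slow_f: float, fast_f: float) -> float:
    """Stops of extra light gained by opening up from slow_f to fast_f.

    Light scales as 1/N^2, so the difference is 2 * log2(slow_f / fast_f).
    """
    return 2 * math.log2(slow_f / fast_f)


def faster_shutter(time_s: float, slow_f: float, fast_f: float) -> float:
    """Shutter time giving the same exposure at fast_f as time_s at slow_f."""
    return time_s * (fast_f / slow_f) ** 2


if __name__ == "__main__":
    print(f"f/2.8 -> f/1.8: {stops_between(2.8, 1.8):.2f} stops")
    t = faster_shutter(1 / 30, 2.8, 1.8)
    print(f"1/30 s at f/2.8 is about 1/{1 / t:.0f} s at f/1.8")
```

This prints about 1.27 stops and about 1/73 s, so "a little more than one stop" is right on the money.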

It's actually still bright enough to focus at f4 on this lens (if you ask me), so you don't need to open and close it all the time, but if you're going to close down to f5.6 or smaller, you may as well use a zoom anyway.

The real advantage of these lenses is how bright (and thus how fast) they are, as well as their lovely shallow depth of field, so keep them at f1.8 to f2.8 for maximum benefit :-)

Taken with my 50mm wide open (that means f1.8 ;-)