important "pre" POST SCRIPT:

This morning I took a photo off the balcony with the camera set to HDR, and I got an interesting shot. I say interesting because I took two shots and attempted to assemble a proper HDR from them. In doing this assembly, I found that one image already contained artifacts familiar to anyone who makes HDR images by hand ... movement artifacts. To wit:

The double image or "ghosting" of the wires, the support and the birds is quite similar to image overlay artifacts. You may note that I had to zoom to 214% in my viewer to make them clear, but to me they seem to match the "pattern" I know so well. This suggests to me that the phone may actually be taking 2 or more images and blending them ... which would also explain the oddness I find below with the ISO, as it may be recording only the lower of the ISOs used in taking the two images. ISO shift is one method (shutter speed is the other) for gaining the two exposures. If you take two images and combine them, what do you do with the exposure data of each? Usually ignore it, or perhaps use one of them.
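
To show why blending produces this pattern, here is a minimal sketch. It is not Oppo's algorithm (which I don't know); it is just a naive 50/50 average of two frames, with a one-pixel "wire" that moves between them. The function names and pixel values are made up for illustration, and both frames are assumed to be already normalised to the same brightness.

```python
def blend_exposures(frame_a, frame_b, weight=0.5):
    """Naive per-pixel weighted average of two equally sized 1-D 'frames'."""
    if len(frame_a) != len(frame_b):
        raise ValueError("frames must have the same length")
    return [(1 - weight) * a + weight * b for a, b in zip(frame_a, frame_b)]


def make_frame(width, wire_position, background=200, wire=20):
    """A bright sky row with one dark 'wire' pixel at wire_position."""
    row = [background] * width
    row[wire_position] = wire
    return row


def dark_pixels(row, background=200):
    """Indices of pixels noticeably darker than the background."""
    return [i for i, value in enumerate(row) if value < background - 10]


# The wire sits at pixel 4 in the first frame and has moved to pixel 6
# by the time the second frame is captured (hypothetical positions).
frame_a = make_frame(12, 4)
frame_b = make_frame(12, 6)
merged = blend_exposures(frame_a, frame_b)

print(dark_pixels(frame_a))  # [4]      one wire in a single frame
print(dark_pixels(merged))   # [4, 6]   two fainter wires after blending
```

Each ghost is half-strength (110 rather than 20 against a background of 200), which is why the doubling only becomes obvious when you zoom in.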

More Testing Needed

So now later in the day more testing is done: I've taken a shot in full sunlight (to force a high shutter speed) of a friend rapidly moving his hands. That should test any HDR algorithm that composites 2 or more images as this will reveal movement ghosting were it present.

Now, examining that image carefully, I found just such a ghosting artifact over on Kev's left hand:

So this answers the question of "is the Oppo HDR a proper HDR" ... yes it is.

To be clear, the image above has a sufficiently high shutter speed to preclude "motion blur" at the speed Kev can move his hands:

Filename - IMG20160621100231.jpg

Make - OPPO

Model - F1f

ExposureTime - 1/1503 seconds

FNumber - 2.20

ISOSpeedRatings - 100
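
As a rough check on that, here is the arithmetic for how far a hand travels while the shutter is open. The 1/1503 s comes from the EXIF above; the hand speed of 5 m/s is purely my assumption for a fast wave, not something I measured.

```python
def travel_during_exposure_mm(speed_m_per_s, exposure_s):
    """Distance (in millimetres) a subject moves while the shutter is open."""
    return speed_m_per_s * exposure_s * 1000


exposure_s = 1 / 1503       # ExposureTime from the EXIF above
hand_speed_m_per_s = 5.0    # ASSUMED speed of a rapidly waved hand

travel = travel_during_exposure_mm(hand_speed_m_per_s, exposure_s)
print(round(travel, 2))     # 3.33 (millimetres)
```

Under that assumption the hand moves only a few millimetres during a single exposure, so a clearly separated double edge is more consistent with two frames taken a moment apart than with blur inside one frame.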

What it doesn't solve is why a tone mapped single image from a RAW file actually gives a better result ... except to say that the JPG writing engine on this phone is crummy.

Eg from my earlier post:

Standard JPG

So HDR is a big improvement over the standard JPG, but you know it could be much better: it could be as good as what Snapseed made from a single exposure.

Why, Oppo? Why cripple your camera module with this crappy JPG engine?

The rest of this post now serves to show what benefits you can get from using HDR mode in many situations, for most owners will be barely wanting to even engage another mode like HDR, let alone bugger about with RAW and have an app like Snapseed on their phone (or drag it across to their PC and edit it there).

Carrying on from my previous post on this topic, I thought I'd put the Oppo on a support and, without it moving, take two shots in identical light to answer the question of:

That could be part of the algorithm, or it could just be that it "picked differently" due to slightly different composition ... So forgive my lack of foreplay and let's just get to it. With the phone on the support I took these two shots. The left is "default" and the right is HDR.

I know which one I prefer ... now, I did nothing more than trigger the shutter button, change mode and trigger the shutter button again. They are both "undisturbed" and identical compositions; the differences are therefore down to the algorithm chosen by the camera programming.

Let's look in more detail, top left corner:

where I see not only brighter, but more smudging from noise reduction algorithms. But...

better contrast and details on the HDR, but

slightly more "oversharpening" on the text on the HDR. Interestingly the wood textures came out better on the HDR

Yet, whites were unsaturated in either:

and if you ask me, the whites are better represented as whites (not greys as in the left hand "normal" image). When I did a course on photography many decades ago one of the early printing assignments (ohh, and we were using film and darkroom printing) was to photograph something white with texture and print it so that it looked white with texture still.

To my eye the right hand (the HDR) side looks better.

So let's look at the data from the EXIF:

Filename - IMG20160619175720.jpg | IMG20160619175704.jpg

...

ExposureTime - 1/10 seconds | 1/10 seconds

FNumber - 2.20 | 2.20

ISOSpeedRatings - 2007 | 800

So the primary settings are the same, but ISO is about 1.3 stops higher on the "normal" shot (ISO 2007) than on the HDR shot (ISO 800). Since the shutter speeds and apertures are the same, this should mean that the normal shot would be brighter than the "HDR" shot, which it isn't.
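
For anyone who wants to check the ISO gap themselves: each stop is a doubling, so the difference is the base-2 logarithm of the ratio. A quick sketch (the function name is just mine):

```python
import math


def stops_between(iso_high, iso_low):
    """Exposure difference in stops between two ISO values (1 stop = 2x)."""
    return math.log2(iso_high / iso_low)


# ISO values taken from the EXIF of the two shots above.
gap = stops_between(2007, 800)
print(round(gap, 2))  # 1.33
```

So with shutter and aperture equal, the ISO 2007 frame should be roughly one and a third stops brighter if nothing else were going on.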

So what's going on?

To answer this we need to get a little into the issues of ISO and dynamic range. Normally, as you squeeze more sensitivity out of a sensor (i.e. increase the ISO), the dynamic range (the total difference between the noise floor and clipping) falls. This is well known. So what this suggests to me is that the default camera settings attempt to push up the ISO and squeeze the dynamic range captured by the sensor into a tighter band, one more compatible with the dynamic range of JPG (which is restricted to 8 bits per channel).

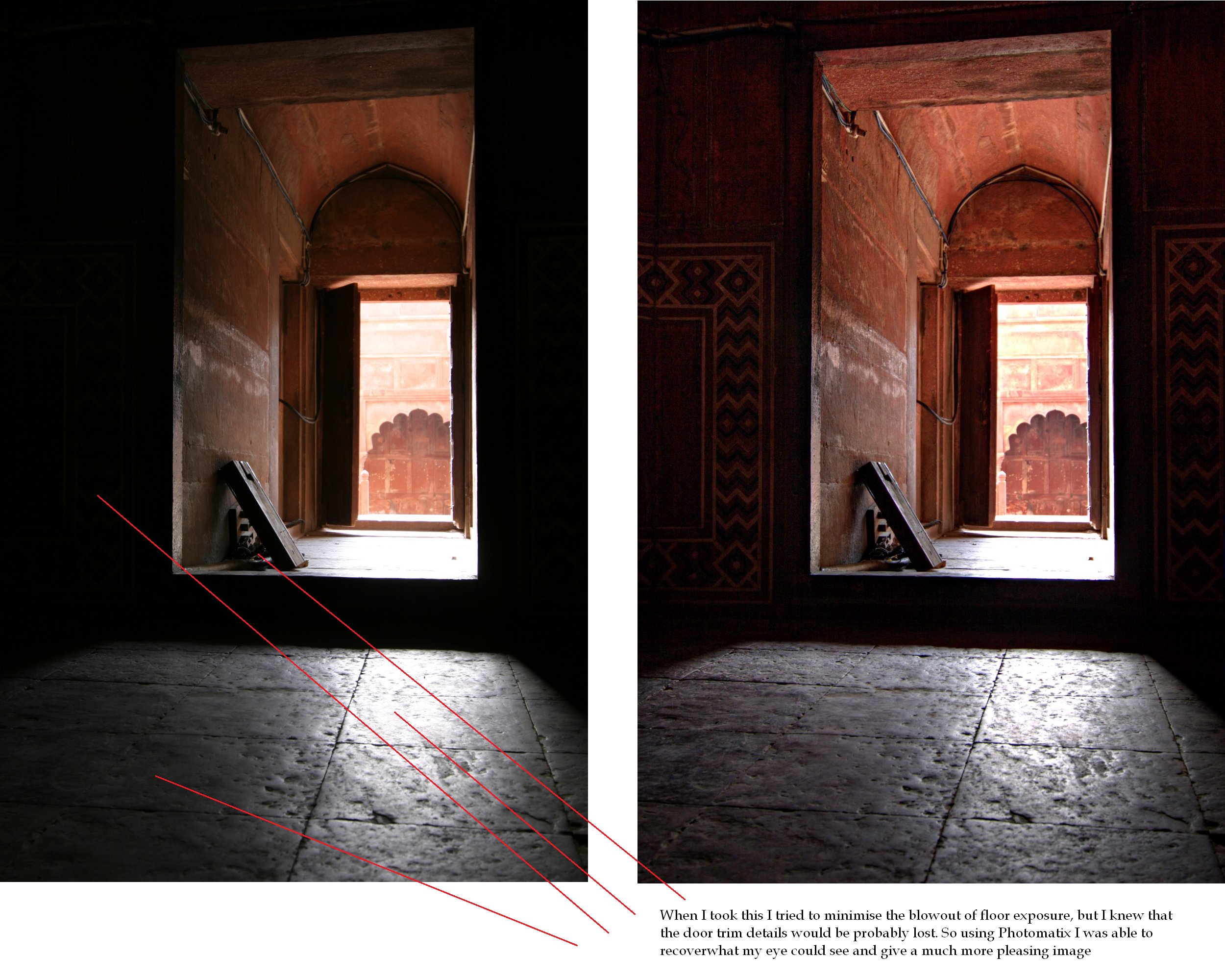

This image (taken with my 10D in India some years back) is a good example of what better software can make of a RAW file, which does not clip the whites and (by dint of being RAW) preserves the entire capture of data including the shadows. Note the better shadow details while maintaining the highlights.

This is exactly what good software should do, to properly tonemap the RAW capture (often 12 bits) into the 8 bit space of the JPG ... the HDR mode on the Oppo is doing an acceptable job of this, but needs (it seems) two exposures to do it, while the standard software algorithm in the camera is not doing that very well at all.
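
To make the 12-bit to 8-bit point concrete, here is a minimal sketch comparing a straight linear squeeze with a simple global tone curve. This is not what Snapseed, Photomatix or the Oppo firmware actually do (their methods are far more elaborate and I don't know their internals); the gamma value of 2.2 and the function names are my own assumptions for illustration.

```python
MAX_12_BIT = 4095  # largest value in a 12-bit RAW sample
MAX_8_BIT = 255    # largest value in an 8-bit JPG channel


def tonemap_linear(raw_value):
    """Scale a 12-bit value straight down to 8 bits (shadows get crushed)."""
    return round(raw_value / MAX_12_BIT * MAX_8_BIT)


def tonemap_gamma(raw_value, gamma=2.2):
    """Apply a simple global curve that lifts shadows before quantising.

    gamma=2.2 is an assumed, commonly used value, not a setting taken
    from any particular camera or application.
    """
    normalised = raw_value / MAX_12_BIT
    return round(normalised ** (1 / gamma) * MAX_8_BIT)


# A few 12-bit sample values from deep shadow up to clipping.
for raw in (0, 40, 400, 4095):
    print(raw, tonemap_linear(raw), tonemap_gamma(raw))
```

Both mappings send 0 to 0 and 4095 to 255, so the whites are not clipped either way, but the curve gives the deep shadows far more of the 8-bit range than the linear squeeze does.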

The above shot from India was taken with the Canon 10D camera, which was introduced in 2003 ... so it's no wonder that by 2009, when I used Photomatix on that same RAW image, I was able to pull a "better" shot out of it.

What is less forgivable (or understandable) is why Oppo is shooting their own product in the foot before sending it out.

Clearly they have the HDR mode (because it was shipped with the phone), and clearly it does a better job of rendering a scene into a photograph.

Given that 90% of reviews don't have a clue, and 90% of buyers don't fiddle with settings, I have to ask: why the hell isn't this the standard mode?

Now the question I posed in the "pre post script" at the top combined with these and previous findings raises some interesting questions.

- now that we have established that HDR is taking more than one shot, why is it so bad?

- given that the RAW file from a single shot demonstrates that the camera has enough detail and dynamic range (here, here, then at the end here), why is it doing such a bad job of the JPG in both HDR and Standard mode?

Are Oppo wanting to undercut their own product?

Myself I'd want audience reception to be great from day 1 ... not tepid and critical as I read on the web.

outtakes

For those of us who have the F1 and like to use our phones to take photographs, I offer you this: set HDR mode every time you take a shot (or, if you're a fiddler, use RAW, as I've already shown how much better that is in the previous post).

Shalom
